Research highlights

Below are some highlights of the research facilitated by the Hoffman2 Cluster at UCLA. We proudly support a diverse and vibrant research community that spans every corner of our campus.

Tip

If you are interested in sharing your research highlights and how the Hoffman2 Cluster is contributing to it, please contact us.

Biostatistics

G.O. Discovery Lab, The Collaboratory et al. - Expression of Stromal Progesterone Receptor and Differential Methylation Patterns in the Endometrium May Correlate with Response to Progesterone Therapy in Endometrial Complex Atypical Hyperplasia

Research Groups: G.O. Discovery Lab, The Collaboratory et al.

Principal Investigator: Sanaz Memarzadeh

Affiliations: Department of Obstetrics and Gynecology, David Geffen School of Medicine, UCLA; UCLA Eli and Edythe Broad Center of Regenerative Medicine and Stem Cell Research; University of California San Francisco School of Medicine; Department of Molecular, Cell and Developmental Biology, UCLA; Institute for Genomics and Proteomics, UCLA; Institute for Quantitative and Computational Biology—The Collaboratory, UCLA; Department of Obstetrics and Gynecology, Southern California Permanente Medical Group; Department of Pathology and Laboratory Medicine, David Geffen School of Medicine, UCLA; Molecular Biology Institute, UCLA; The VA Greater Los Angeles Healthcare System

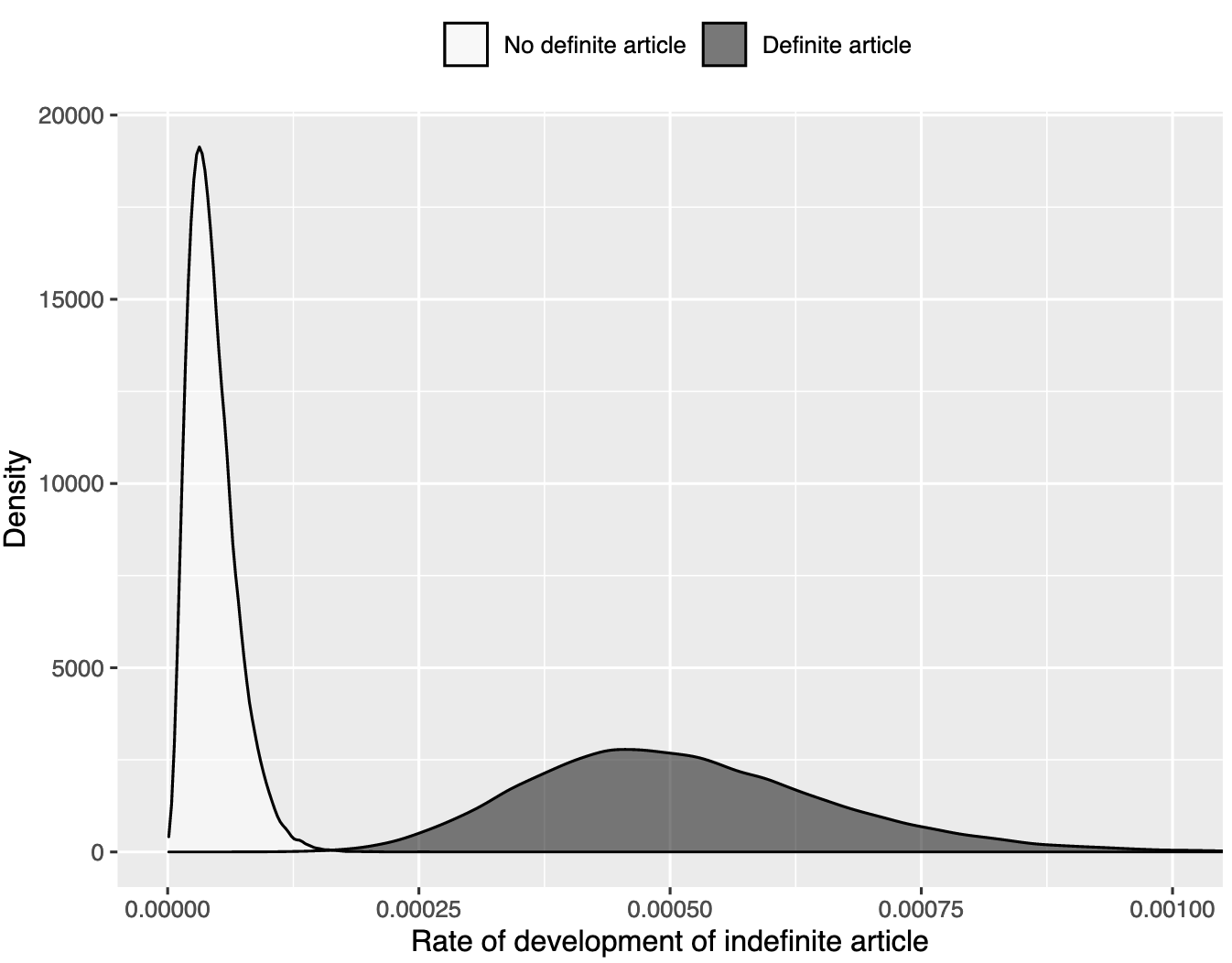

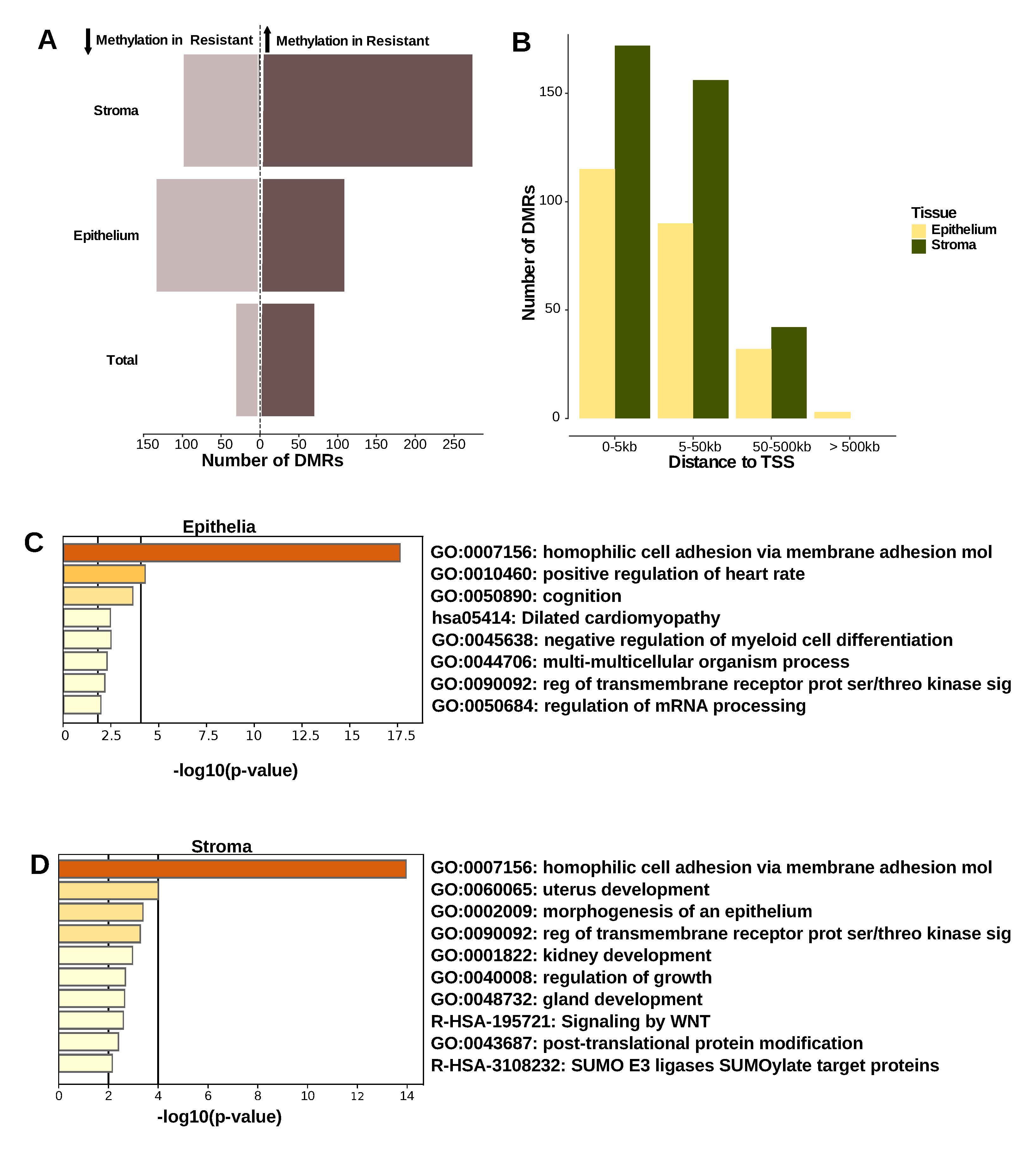

About: "The project was a collaboration between the departments mentioned above, and part of it was the analysis of whole genome bisulfite sequencing (WGBS) data from biopsies (stromal and endometrial tissue) of 7 treatment-sensitive and 7 treatment-resistant patients with complex atypical hyperplasia (CAH) or endometrial adenocarcinoma. The majority of differentially methylated regions (DMRs) between sensitive and resistant patients in both the stroma and epithelium were located proximal to a transcriptional start site (TSS), suggesting they may play a role in gene regulation. GO and pathway enrichment analyses for genes associated with DMRs in the epithelium and stroma revealed an enrichment for cell adhesion genes." –Anela Tosevska, Collaboratory Fellow
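The DMR criteria used in this study are a minimum 30% methylation difference and "proximal" meaning within 5 kb upstream or downstream of a TSS (see the figure caption). They can be made concrete with a small Python sketch. This is an illustration only, not the authors' pipeline. The `Region` record, the `tss_by_chrom` mapping, the interval-overlap rule, and the toy coordinates are all hypothetical assumptions.

```python
from dataclasses import dataclass

@dataclass
class Region:
    chrom: str
    start: int         # genomic start coordinate
    end: int           # genomic end coordinate (start <= end)
    delta_meth: float  # methylation difference, resistant minus sensitive, on a 0-1 scale

def near_tss(region, tss_by_chrom, window=5_000):
    """True if the region overlaps [tss - window, tss + window] for any TSS on its chromosome."""
    return any(
        region.start <= tss + window and region.end >= tss - window
        for tss in tss_by_chrom.get(region.chrom, [])
    )

def summarize_dmrs(regions, tss_by_chrom, min_delta=0.30, window=5_000):
    """Count regions passing the |delta| threshold by direction and by TSS proximity."""
    counts = {"hypo": 0, "hyper": 0, "tss_proximal": 0, "distal": 0}
    for region in regions:
        if abs(region.delta_meth) < min_delta:
            continue  # below the minimum methylation difference
        counts["hyper" if region.delta_meth > 0 else "hypo"] += 1
        counts["tss_proximal" if near_tss(region, tss_by_chrom, window) else "distal"] += 1
    return counts

# Hypothetical toy input: two TSS positions and four candidate regions
tss = {"chr1": [10_000], "chr2": [50_000]}
regions = [
    Region("chr1", 12_000, 12_500, -0.42),  # passes, lower in resistant, within 5 kb of a TSS
    Region("chr1", 40_000, 40_300, 0.35),   # passes, higher in resistant, far from any TSS
    Region("chr2", 49_000, 49_200, 0.10),   # fails the 30% threshold
    Region("chr2", 55_500, 56_000, 0.31),   # passes, higher in resistant, just beyond 5 kb
]
print(summarize_dmrs(regions, tss))
# {'hypo': 1, 'hyper': 2, 'tss_proximal': 1, 'distal': 2}
```

A real analysis would use sorted intervals or an interval tree instead of scanning every TSS. The linear scan is kept here only for readability.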

How the Hoffman2 Cluster is facilitating this research: "The data analysed for this project were whole genome bisulfite sequencing (WGBS) data, sequenced on an Illumina NovaSeq, which required high storage capacity as well as substantial computational resources (both time and memory). I would not have been able to process the data without the Hoffman2 Cluster." –Anela Tosevska, Collaboratory Fellow

Software used on the Hoffman2 Cluster: Python v3.7.0, R v3.5.1, SAMtools, GATK

Reference: Neal, A.S., Nunez, M., Lai, T. et al. Expression of Stromal Progesterone Receptor and Differential Methylation Patterns in the Endometrium May Correlate with Response to Progesterone Therapy in Endometrial Complex Atypical Hyperplasia. Reprod. Sci. 27, 1778–1790 (2020). https://doi.org/10.1007/s43032-020-00175-w

"Differentially methylated regions in the stroma and epithelium of resistant vs sensitive patients. a) The number of differentially methylated regions (DMRs) with at least a 30% difference in DNA methylation in resistant vs sensitive patients. The number of regions with decreased methylation in resistant samples is depicted to the left of the dotted line (light brown), and the regions with increased methylation to the right (dark brown). b) Number of DMRs per tissue and their proximity to gene transcription start sites (TSS). c)–d) Gene ontology and pathway enrichment analysis for genes associated with DMRs (proximal to TSS, 5 kb upstream and downstream of the TSS) in epithelium (c) and stroma (d). The negative log of the enrichment p-values is depicted on the x-axes; vertical lines mark arbitrary cut-off p-values at p = 0.01 and p = 0.0001. Associated GO terms are depicted on the y-axes." –Neal et al., 2020

Economics

Xuanyu (Iris) Fu, PhD Student, adviser Dora Costa - Intergenerational Transmission of Wealth Loss: Evidence from the Freedman’s Bank

Principal Investigator: Xuanyu (Iris) Fu, a PhD student in the Department of Economics. This is a solo-authored paper; the work was carried out on the Hoffman2 Cluster through CCPR, with Dora Costa as adviser.

Academic Adviser: Dora Costa

Affiliation: Department of Economics

About: "This paper provides a comprehensive analysis of the intergenerational effects of a negative wealth shock for African Americans by examining a historical episode, the failure of the Freedman’s Bank (1865–1874). The bank failure resulted in the loss of deposits for approximately 100,000 depositors. I collect individual-level bank depositor records and match them to the 1880 and 1900 Censuses to obtain their descendants’ literacy and occupation outcomes. To estimate the causal effect of depositing, I employ an instrumental variable strategy that exploits county-level differences in the take-up rate of banking. Children of depositors were 37 percent more likely to be literate than children of non-depositors. This effect is explained by an increase in children’s literacy while the bank was in operation, which outweighs the negative impact of the wealth loss." –Xuanyu (Iris) Fu
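The instrumental variable idea mentioned above can be illustrated in its simplest form: one instrument, no covariates. In that case the IV estimate reduces to cov(z, y) / cov(z, x). This Python sketch uses entirely hypothetical simulated data with an unobserved confounder. It is not the paper's specification, which uses county-level take-up as the instrument and individual census outcomes. An applied analysis with controls would typically use two-stage least squares, for example in Stata as listed below.

```python
import numpy as np

def iv_estimate(y, x, z):
    """Single-instrument IV (Wald) estimate of the effect of x on y: cov(z, y) / cov(z, x)."""
    y, x, z = (np.asarray(a, dtype=float) for a in (y, x, z))
    zc = z - z.mean()
    return float(np.dot(zc, y - y.mean()) / np.dot(zc, x - x.mean()))

def ols_slope(y, x):
    """Ordinary least-squares slope of y on x, for comparison."""
    y, x = np.asarray(y, dtype=float), np.asarray(x, dtype=float)
    xc = x - x.mean()
    return float(np.dot(xc, y - y.mean()) / np.dot(xc, xc))

# Hypothetical simulated data with an unobserved confounder u
rng = np.random.default_rng(0)
n = 50_000
z = rng.normal(size=n)                      # instrument: shifts x, affects y only through x
u = rng.normal(size=n)                      # confounder: affects both x and y
x = 0.8 * z + u + rng.normal(size=n)        # "treatment"
y = 0.5 * x + 2.0 * u + rng.normal(size=n)  # true causal effect of x on y is 0.5

print(f"OLS: {ols_slope(y, x):.2f}  IV: {iv_estimate(y, x, z):.2f}")
```

With this design, OLS is biased upward (its large-sample value is 3.32 / 2.64 ≈ 1.26) because u raises both x and y. The IV estimate stays close to the true 0.5, since z is unrelated to u.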

How the Hoffman2 Cluster is facilitating this research: "Hoffman2 helped me resolve many computational challenges because it allows me to work efficiently with large datasets (full-count censuses). In addition, I was able to speed up my work by using the job scheduler and submitting batch jobs." –Xuanyu (Iris) Fu

Software used on the Hoffman2 Cluster: Stata

Presentation - Intergenerational Transmission of Wealth Loss: Evidence From the Freedman’s Bank by Iris Fu, Economics Department, UCLA, Job Market Paper, September 25, 2020

Linguistics

David Goldstein - Cascades of change: How domino effects shape language

Principal Investigator: David Goldstein

Affiliation: Department of Linguistics & Program in Indo-European Studies

About: "The central focus of my research project is correlations among linguistic changes. If a language develops one property, how does that affect the development of subsequent properties? The initial stage of my project has focused on the relationship between definite and indefinite articles (such as English 'the' and 'an') among the Indo-European languages. Bayesian phylogenetic methods revealed that the rate at which indefinite articles develop among languages with a definite article (the gray graph) is decidedly faster than the rate at which indefinite articles develop in languages without a definite article (the white graph), which supports the view that the development of a definite article makes that of an indefinite article more likely." –David Goldstein

How the Hoffman2 Cluster is facilitating this research: The Bayesian MCMC analyses were carried out with RevBayes version 1.0.13. Posterior distributions of transition rates are estimated with MCMC sampling, which is computationally demanding. Carrying out such analyses on a personal computer is infeasible, and the Hoffman2 Cluster proved essential for completing them.
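To show why MCMC sampling of a rate is iterative, and therefore expensive, here is a toy random-walk Metropolis sampler for a single rate parameter. It assumes hypothetical exponential waiting times and an Exponential(1) prior. This is not the RevBayes model, which estimates rates of change across a phylogeny. This toy case was chosen because its posterior is known in closed form (Gamma with shape n + 1 and rate 1 + Σt), which lets the sampler be checked.

```python
import numpy as np

def log_posterior(rate, waiting_times, prior_rate=1.0):
    """Log of an Exponential(prior_rate) prior times an exponential likelihood, up to a constant."""
    if rate <= 0:
        return -np.inf  # rates must be positive
    n = len(waiting_times)
    return n * np.log(rate) - rate * np.sum(waiting_times) - prior_rate * rate

def metropolis(waiting_times, n_iter=20_000, step=0.5, init=1.0, seed=0):
    """Random-walk Metropolis sampler over the rate parameter."""
    rng = np.random.default_rng(seed)
    rate = init
    current = log_posterior(rate, waiting_times)
    samples = np.empty(n_iter)
    for i in range(n_iter):
        proposal = rate + rng.normal(scale=step)  # symmetric proposal
        candidate = log_posterior(proposal, waiting_times)
        if np.log(rng.uniform()) < candidate - current:  # accept with prob min(1, ratio)
            rate, current = proposal, candidate
        samples[i] = rate
    return samples

# Hypothetical data: 50 waiting times drawn with true rate 2
data = np.random.default_rng(1).exponential(scale=0.5, size=50)
samples = metropolis(data)
posterior_mean = samples[2_000:].mean()  # discard burn-in
exact_mean = (1 + len(data)) / (1.0 + data.sum())
print(f"MCMC mean {posterior_mean:.3f} vs exact {exact_mean:.3f}")
```

Each iteration depends on the previous one, so a single chain cannot be parallelized internally. Runs on a cluster are usually sped up by running many chains or many analyses side by side.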

Software used on the Hoffman2 Cluster: RevBayes version 1.0.13

Mechanical and Aerospace Engineering¶

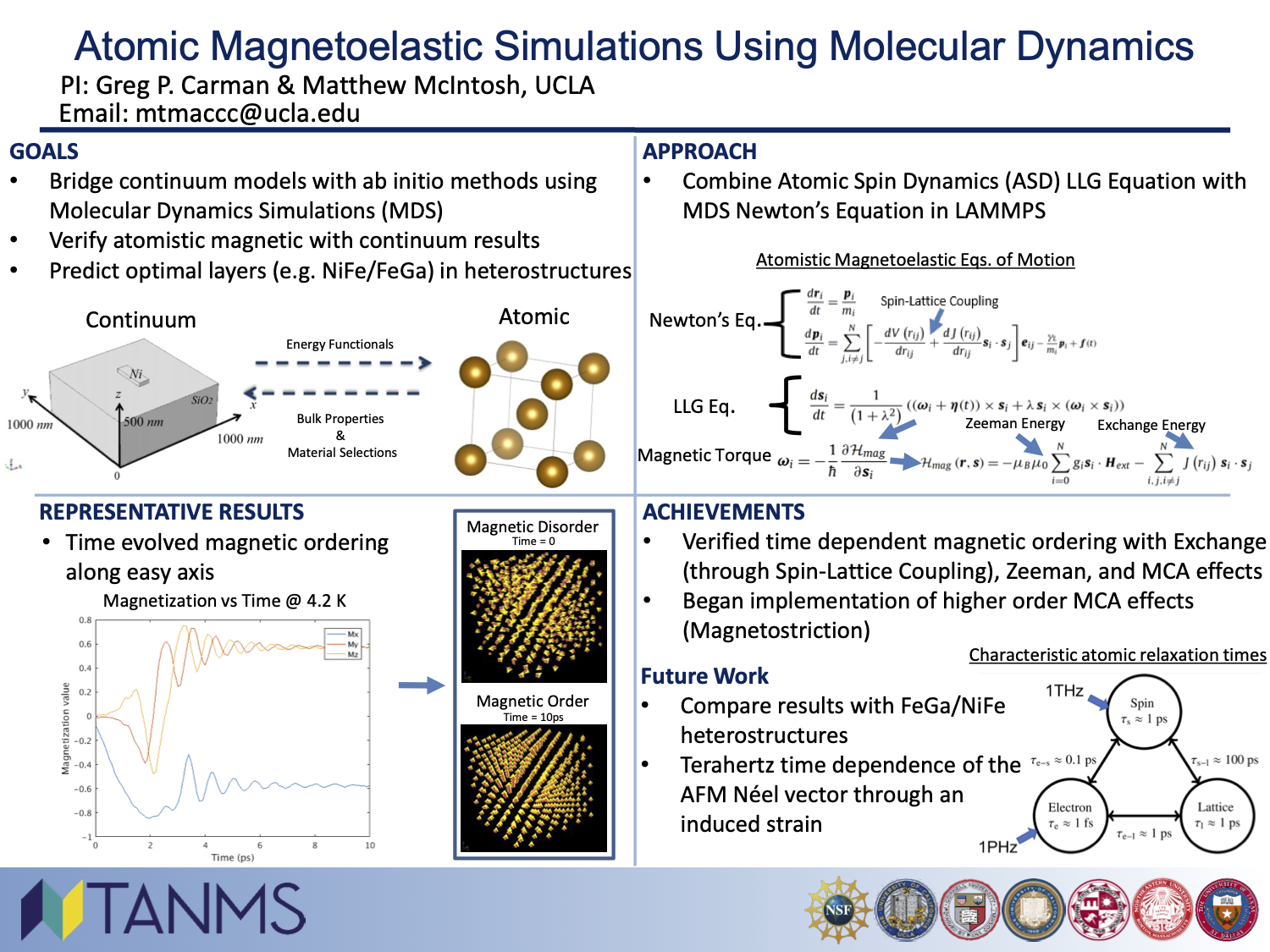

TANMS Modeling Thrust - Atomic Magnetoelastic Simulations Using Molecular Dynamics¶

Research Group: TANMS Modeling Thrust

Principal Investigator: Greg Carman

Graduate Student: Matthew McIntosh

Affiliation: Mechanical and Aerospace Engineering

About: "Understanding the fundamentals of magnetism and its role in magnetomechanical forces at the atomic level has been limited to using Density Functional Theory (DFT). Specifically, DFT is one of the few characterization tools available that properly characterizes the orbit of an electron at zero kelvin. While useful for comprehending the underlying theory, these computational simulations are often of a very small geometric size (about 110 nm³ at their largest) and, as previously mentioned, performed at zero kelvin. These conditions fail to capture the dynamical effects that thermal fluctuations and long-range order have on the induced magnetic energies, and they call for an alternative computational algorithm. Recently, a new method coupling Atomic Spin Dynamics (ASD) and Molecular Dynamics (MD) has been published, which would allow for a better understanding of the induced magnetic energies on a larger scale. This method (MD-ASD) would enable the simulation of larger atomic systems, such as those present near complex interfaces (e.g. NiFe/FeGa layers), and assist in understanding the dynamic magnetic response in these nm-thick films with exchange-coupled systems." –Matthew McIntosh

How the Hoffman2 Cluster is facilitating this research: The greatest benefit we receive from computing on the Hoffman2 Cluster is access to multiple high-speed nodes and parallel processing. The end goal of this research is the simulation of interfaces in multi-atom systems, so increasing the total number of available processors allows for shorter run times and faster completion. We also seek to bridge the gap between continuum and quantum descriptions with this atomistic method (i.e. length scales on the order of 100 nm to 1 μm). These relatively long length scales would be nearly impossible to run to completion without multiprocessor parallel computing.

Software used on the Hoffman2 Cluster: LAMMPS

Reference: TANMS

2020 ModelQuad Poster by Greg P. Carman & Matthew McIntosh, UCLA

Physics and Astronomy

Plasma Simulation Group - Particle-in-Cell and Kinetic Simulation Software Center (PICKSC)

Research Group: Plasma Simulation Group

Principal Investigator: Warren Mori

Researchers: Frank Tsung, Ricardo Fonseca, Viktor Decyk, Benjamin Winjum

Affiliation: Department of Physics and Astronomy

About: "PICKSC’s mission is to support an international community of PIC and plasma kinetic software developers, users, and educators; to increase the use of this software for accelerating the rate of scientific discovery; and to be a repository of knowledge and history for PIC. Project website." – PICKSC Group

How the Hoffman2 Cluster is facilitating this research: See PICKSC Research

Software used on the Hoffman2 Cluster: Research software includes the in-house production codes OSIRIS, QuickPIC, UPIC-EMMA, and OSHUN.

Reference: See PICKSC Publications

Treu Research Group - Strong gravitational lensing puts dark matter theories to the test

Research Group: Treu Research Group

Principal Investigator: Tommaso Treu

Graduate Student: Daniel Gilman (PhD, 2020)

Postdoctoral Researcher: Simon Birrer

Affiliation: Department of Physics and Astronomy

About: Strong gravitational lensing provides an elegant and direct means of probing dark matter structure in the Universe. Gravitational lensing refers to the deflection of light by gravitational fields, and strong lensing is a specific case where the deflection field is strong enough to produce multiple images of a single background light source. If the reigning cosmological theory of cold dark matter is correct, countless invisible concentrations of dark matter, or “halos”, should lie between us and a lensed background source. The detection of these halos through their lensing effects would confirm a central prediction of cold dark matter, while a non-detection would demand new physics to explain their absence. We have analyzed eight strongly lensed quasars with sophisticated models for both the gravitational lensing physics and the dark matter content of the lens systems. Using the computational resources provided by the Hoffman2 Cluster, we generate millions of simulated strong lens systems that include dark matter halos, and use the simulations to infer the abundance of dark matter structure situated between us and the lensed source billions of light years away. We confirm the detection of dark matter structure on halo mass scales of 10⁸ solar masses, a regime where these structures would not host stars and therefore evade detection through other observational techniques. Our results rule out entire classes of dark matter theories that predict no structure on this scale, narrowing the range of possible theories of dark matter that can explain the observed structure in the Universe. Gilman et al. 2020

How the Hoffman2 Cluster is facilitating this research: Using the computational resources provided by the Hoffman2 Cluster, we generate millions of simulated strong lens systems that include dark matter halos, and use the simulations to infer the abundance of dark matter structure situated between us and the lensed source billions of light years away.

References: Gilman, Daniel; Birrer, Simon; Nierenberg, Anna; Treu, Tommaso; Du, Xiaolong; Benson, Andrew, Warm dark matter chills out: constraints on the halo mass function and the free-streaming length of dark matter with eight quadruple-image strong gravitational lenses, Monthly Notices of the Royal Astronomical Society, Volume 491, Issue 4, p.6077-6101, 2020 & Hubble Detects Smallest Known Dark Matter Clumps (press release)

Two of the millions of renderings of the dark matter along the line of sight to the lens RXJ0911+055 –Gilman et al. 2020

Treu Research Group - The most precise measurement of the expansion rate of the Universe from a single gravitational lens system

Research Group: Treu Research Group

Principal Investigator: Tommaso Treu

Graduate Student: Anowar Shajib (PhD, 2020)

Postdoctoral Researcher: Simon Birrer

Affiliation: Department of Physics and Astronomy

About: We measure the expansion rate of the Universe by analyzing a strongly gravitationally lensed quasar system. This kind of measurement requires highly precise modeling of the lens system. Our adopted model is optimized by reconstructing a high-resolution image of the lens system and then comparing it with the observed images from the Hubble Space Telescope. This optimization is a numerically expensive procedure, as it encapsulates a Bayesian inference of the model posterior that requires ~10^7 calls of the likelihood function. The model-based reconstruction is performed using the publicly available software Lenstronomy, and the Bayesian inference was performed through the nested sampling algorithm using the publicly available software PyPolychord. Moreover, the optimization is carried out for 24 distinct models to perform Bayesian model averaging based on the model evidence. We implemented Bayesian model averaging with directly computed model evidence for the first time in lens modeling, which is more robust than previously adopted approximation schemes for model averaging. Due to the demanding nature of this inference procedure, which required ~500K CPU hours, the parallelization provided by Hoffman2 was crucial in completing this project within 8 months. From this large computational endeavor, we measure the expansion rate of the Universe, known as the Hubble constant, with 3.9% precision, which is the most precise measurement of the Hubble constant to date from a single lens system. Shajib et al. 2020
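Bayesian model averaging with directly computed evidence works by weighting each model in proportion to its evidence. With equal prior probabilities for the models, the weight of model i is Z_i / Σ Z_j. The Python sketch below computes these weights from log-evidences of the kind nested samplers report, using the usual log-sum-exp trick for numerical stability. It then averages one point estimate per model. This is a simplified sketch under those assumptions: the study averaged full posteriors over 24 models, and the two-model example and its values are hypothetical.

```python
import math

def bma_weights(log_evidences):
    """Posterior model probabilities from log-evidences, assuming equal prior model probabilities."""
    peak = max(log_evidences)  # subtract the maximum before exponentiating to avoid overflow
    unnormalized = [math.exp(le - peak) for le in log_evidences]
    total = sum(unnormalized)
    return [w / total for w in unnormalized]

def bma_point_estimate(estimates, log_evidences):
    """Evidence-weighted average of one point estimate per model."""
    weights = bma_weights(log_evidences)
    return sum(w * est for w, est in zip(weights, estimates))

# Hypothetical example: two models, the second with three times the evidence of the first
log_z = [0.0, math.log(3.0)]
print(bma_weights(log_z))                       # approximately [0.25, 0.75]
print(bma_point_estimate([70.0, 74.0], log_z))  # approximately 73.0
```

Subtracting the maximum log-evidence matters in practice. Log-evidences are often large in magnitude, and exponentiating them directly would overflow or underflow.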

How the Hoffman2 Cluster is facilitating this research: Due to the demanding nature of this inference procedure requiring ~500K CPU hours, the parallelization provided by Hoffman2 was crucial in completing this project within the timescale of 8 months.

Software used on the Hoffman2 Cluster: Lenstronomy and PyPolychord

"The figure shows the observed image (left) of the lens system from the Hubble Space Telescope, and our model-based reconstruction (right)." –Shajib et al. 2020

Treu Research Group - Using light echoes to map the broad line region and measure black hole masses in seven active galactic nuclei

Geometries of the Hβ-emitting BLR [Williams et al. 2018]

Research Group: Treu Research Group

Principal Investigator: Tommaso Treu

Graduate Student: Peter Williams

Affiliation: Department of Physics and Astronomy

About: "We use time series of spectra to measure the properties of the gaseous broad line region (BLR) at the centers of distant galaxies and to make precise measurements of the mass of the central supermassive black hole. The BLR is too small to be resolved even with the most powerful telescopes, so we substitute time resolution for spatial resolution by monitoring the galaxy over multi-month observing campaigns. The light from the BLR responds to fluctuations in the light from the central regions of the galaxy on timescales of a few days. Traditional methods use this time lag, paired with the known speed of light, to estimate the size of the BLR and obtain a black hole mass, but these measurements are uncertain due to the unknown nature of the BLR. We instead use a forward modeling approach to fit for the structure and kinematics of the BLR and derive precise measurements of the black hole mass." –Treu Research Group
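The traditional estimate described above can be written as R = cτ for the BLR size, where τ is the measured time lag. Combined with a broad-line velocity width Δv, this gives the virial mass M = f R Δv² / G. The dimensionless factor f absorbs the unknown BLR geometry and kinematics, which is the uncertainty the group's forward modeling is designed to remove. The Python sketch below assumes f = 1 and uses hypothetical inputs. It illustrates the arithmetic of the traditional method only, not the Treu group's modeling code.

```python
SPEED_OF_LIGHT_M_S = 2.998e8  # speed of light, m/s
G_SI = 6.674e-11              # gravitational constant, m^3 kg^-1 s^-2
SOLAR_MASS_KG = 1.989e30      # solar mass, kg
SECONDS_PER_DAY = 86_400

def blr_radius_m(lag_days):
    """BLR size from the light-travel time: R = c * tau."""
    return SPEED_OF_LIGHT_M_S * lag_days * SECONDS_PER_DAY

def virial_mass_solar(lag_days, line_width_km_s, virial_factor=1.0):
    """Virial black hole mass M = f * R * (delta v)^2 / G, in solar masses.

    virial_factor (f) absorbs the unknown BLR geometry and kinematics; its value
    depends on the calibration and on how the line width is measured.
    """
    velocity_m_s = line_width_km_s * 1e3
    mass_kg = virial_factor * blr_radius_m(lag_days) * velocity_m_s**2 / G_SI
    return mass_kg / SOLAR_MASS_KG

# Hypothetical inputs: a 10-day lag and a 3000 km/s line width, with f = 1
print(f"{virial_mass_solar(10, 3000):.2e} solar masses")
```

For these inputs the estimate is about 1.8 × 10⁷ solar masses. Because the result scales linearly with f, any uncertainty in the BLR geometry carries directly into the mass.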

How the Hoffman2 Cluster is facilitating this research: The modeling code was developed by the Treu research group and uses the publicly available diffusive nested sampling code DNest4. The BLR model is described by 22 parameters, and exploring the complex parameter space is computationally expensive, taking ~2 weeks on a high-powered desktop computer and ~1 week on the Hoffman2 Cluster. The Williams et al. (2018) analysis consisted of seven galaxies, each with 3 versions of the data. Including other tests in addition to those published, the modeling code was run over 50 times, so the ability to fit models simultaneously on the Hoffman2 Cluster and group computers was critical to finishing the modeling in a reasonable time frame. These results nearly doubled the sample of modeled BLRs and provided black hole mass measurements that are twice as precise as those from traditional methods.

Software used on the Hoffman2 Cluster: DNest4 and code developed by the Treu research group.

References:

Quantitative Psychology

Du Research Group - Testing Changes in Growth Curve Models with Non-normal Data using Permutation

Research Group: Du Research Group

Principal Investigator: Han Du

Graduate Student: Stefany Mena

Affiliation: Department of Psychology

About: "The goal of the project was to test a permutation method to make accurate inferences of overall slopes in growth curve models with nonnormal data." –Stefany Mena, graduate student, Du Research Group

How the Hoffman2 Cluster is facilitating this research: Permutation methods in statistics are computationally expensive because each dataset has to be permuted thousands of times. The Hoffman2 Cluster allowed us to simulate, permute and analyze hundreds of datasets from multiple conditions within a reasonable window of time.
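To see why permutation is expensive, consider the basic permutation test for a slope. It recomputes the slope on thousands of shuffled copies of each dataset, and each shuffled copy is a full refit. This Python sketch is a deliberately simplified stand-in: it shuffles outcomes relative to time in an ordinary regression. The study's method for growth curve models must respect the dependence among repeated measures on the same person, which this sketch ignores. The data, sizes, and names are hypothetical.

```python
import numpy as np

def slope(x, y):
    """Least-squares slope of y on x."""
    xc = x - x.mean()
    return np.dot(xc, y - y.mean()) / np.dot(xc, xc)

def permutation_test_slope(x, y, n_perm=2_000, seed=0):
    """Two-sided permutation p-value for H0: slope = 0, shuffling y relative to x."""
    rng = np.random.default_rng(seed)
    x, y = np.asarray(x, dtype=float), np.asarray(y, dtype=float)
    observed = slope(x, y)
    null = np.array([slope(x, rng.permutation(y)) for _ in range(n_perm)])
    # Count permuted slopes at least as extreme; the +1 terms include the observed data itself
    p_value = (1 + np.sum(np.abs(null) >= abs(observed))) / (n_perm + 1)
    return float(observed), float(p_value)

# Hypothetical data: 5 time points x 20 observations, heavy-tailed (t with 3 df) errors
rng = np.random.default_rng(42)
time = np.repeat(np.arange(5.0), 20)
outcome = 1.0 * time + rng.standard_t(df=3, size=time.size)
print(permutation_test_slope(time, outcome))
```

A simulation study repeats this for every simulated dataset in every condition, which multiplies the cost. This is the workload the cluster spreads across many cores.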

Software used on the Hoffman2 Cluster: R

IMPS Poster on “Testing Changes in Growth Curve Models with Non-normal Data Using Permutation”

Du Research Group - Novel Imputation Method for Scale Scores

Research Group: Du Research Group

Principal Investigator: Han Du

Graduate Student: Egamaria Alacam

Affiliation: Department of Psychology

About: "Researchers predominantly use scale scores from questionnaires, and often each scale will have missing items. This project assesses a novel imputation method for scale scores and compares it with the traditional imputation routines in current use. Based on our results, our novel method performs better than the traditional imputation routine when a large number of scales are present, as well as when the sample size is small and the missingness rate is high." – Egamaria Alacam, graduate student, Du Research Group

How the Hoffman2 Cluster is facilitating this research: Using the Hoffman2 Cluster, I was able to run multiple replications at once, instead of having my computer run one replication per condition at a time. I had 54 different conditions and had to run 1,000 replications for each condition. The Hoffman2 Cluster therefore helped me obtain results much more quickly and efficiently than I could have using only my own computer. I would have needed 54,000 computers to match the swiftness of the Hoffman2 Cluster.
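A simulation grid like this one (54 conditions × 1,000 replications = 54,000 runs) maps naturally onto a scheduler array job. Each task reads its own index and works out which condition and replication to run. This Python sketch assumes a Grid Engine style scheduler, where `qsub -t 1-54000` submits an array job and each task sees its 1-based index in the `SGE_TASK_ID` environment variable. Check the Hoffman2 job array documentation for the exact submission details. The constant and function names are hypothetical.

```python
import os

N_CONDITIONS = 54
N_REPLICATIONS = 1_000

def task_to_design(task_id, n_conditions=N_CONDITIONS, n_reps=N_REPLICATIONS):
    """Map a 1-based array task ID to 0-based (condition, replication) indices."""
    if not 1 <= task_id <= n_conditions * n_reps:
        raise ValueError(f"task_id {task_id} outside 1..{n_conditions * n_reps}")
    index = task_id - 1
    return index // n_reps, index % n_reps

if __name__ == "__main__":
    # Each array task reads its own index; default to 1 when run outside the scheduler
    task_id = int(os.environ.get("SGE_TASK_ID", "1"))
    condition, replication = task_to_design(task_id)
    print(f"task {task_id}: condition {condition}, replication {replication}")
```

When per-job overhead or scheduler limits on array size matter, a common alternative is to group replications into fewer, longer tasks, for example one task per condition that loops over its 1,000 replications.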

Software used on the Hoffman2 Cluster: R, blimp, mplus

"Novel Imputation Method for Scale Scores (Slide 1)" – By Egamaria Alacam, Han Du, & Craig Enders¶

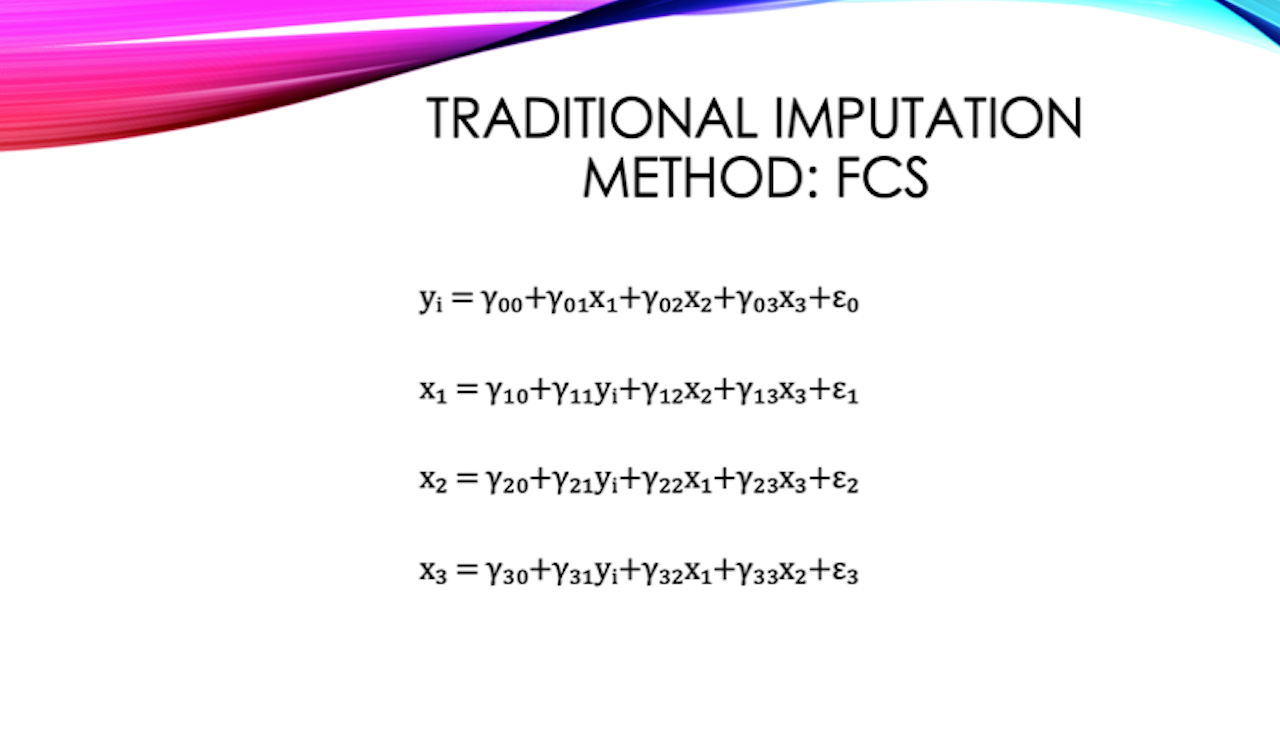

"Novel Imputation Method for Scale Scores (Slide 2)" – By Egamaria Alacam, Han Du, & Craig Enders¶

"Novel Imputation Method for Scale Scores (Slide 3)" – By Egamaria Alacam, Han Du, & Craig Enders¶

"Novel Imputation Method for Scale Scores (Slide 4)" – By Egamaria Alacam, Han Du, & Craig Enders¶

Neuroscience

Bookheimer Group - Hippocampal modulators of delayed recall: Human Connectome Project in Aging at UCLA

Research Group: Bookheimer Group

Principal Investigator: Susan Bookheimer

Graduate Student: Tyler J. Wishard

Affiliation: Psychiatry & Biobehavioral Sciences

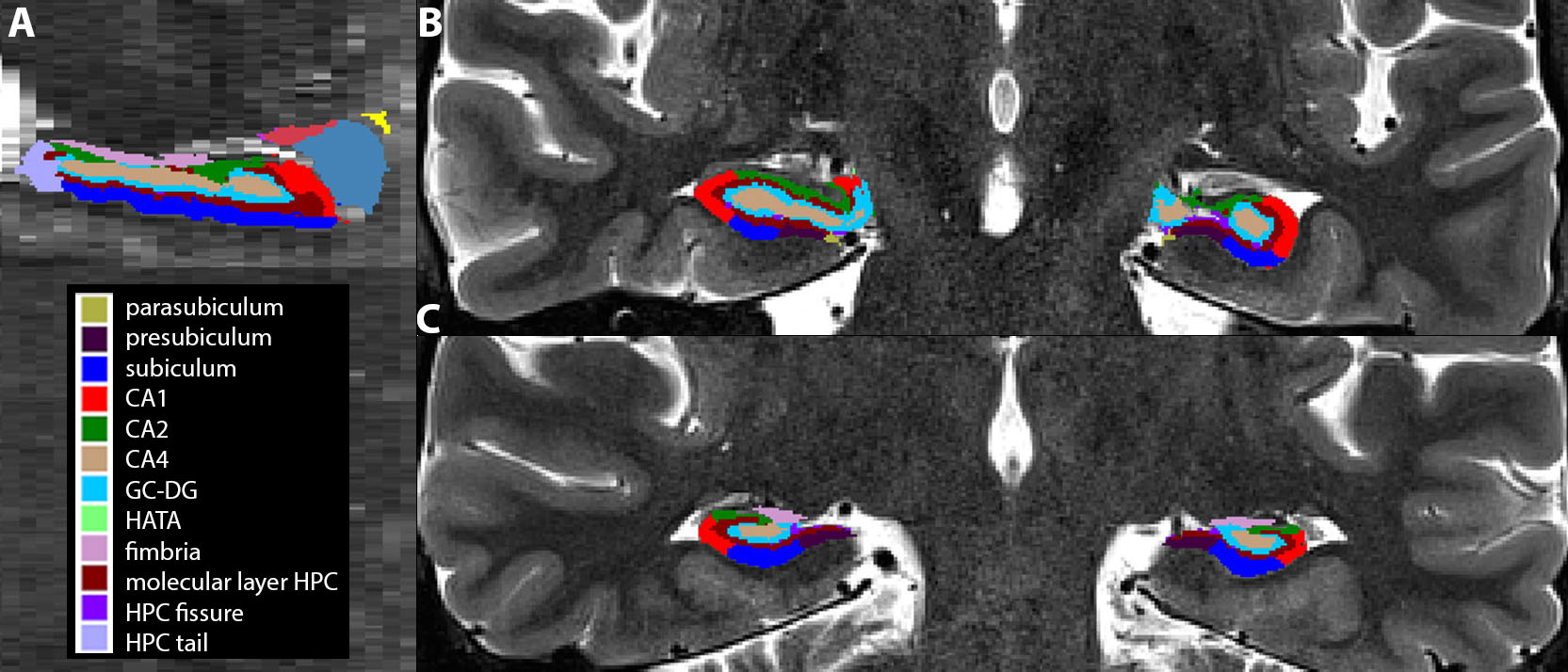

About: "The hippocampus, a vital brain region for memory processing, is composed of distinct subfields. In this study, we investigated hippocampal subfield volume alterations at different ages and assessed their correlations with memory capabilities. Data from 278 participants aged 36–96 years (mean age = 58.4 years, 57.9% female) were collected as part of the Mapping the Human Connectome During Typical Aging Study at UCLA. We used a 3-Tesla MRI scanner to acquire an MPRAGE T1-weighted image for visual reference during anatomical segmentation, which was obtained using FreeSurfer version 6.0 software (Iglesias et al., 2015). Memory was measured using the Rey Auditory Verbal Learning Test (Rey, 1941), in which a list of 15 unrelated words is presented verbally to the participant, who is instructed to repeat each word recalled, over five learning trials. Following a second, interference list of the same length, delayed recall of the original word list was assessed. A stepwise linear regression was performed to examine the relationship between the delayed recall score and age. We found that age strongly predicted delayed recall performance (r = -0.355, p < 0.0001), with older adults scoring lower. This association was strengthened by adding the NIH Toolbox Fluid Composite score and left hippocampal subfield CA1 volume (R² = 0.252). Overall, hippocampal fissure volume increased with age, while volumes of the other subfields decreased. These findings suggest that a reduction in CA1 subfield volume, in conjunction with increased age and declining fluid cognition, is associated with a decline in memory abilities. CA1 subfield volume could indicate risk of memory impairment across the adult lifespan." –Tyler J. Wishard, Bookheimer Group

How the Hoffman2 Cluster is facilitating this research: The Hoffman2 Cluster facilitates our team’s research by hosting the Human Connectome Project data collected at UCLA as well as at several other neuroimaging consortium sites across the United States. The MRI data are preprocessed, cleaned, and analyzed on the cluster using publicly available software provided to Hoffman2 users. Our team benefits from the Hoffman2 Cluster’s high-performance compute nodes, which can analyze large datasets and are ideal for collaborative research projects with advanced computing needs.

Software used on the Hoffman2 Cluster: FreeSurfer

Reference:

"Example participant’s hippocampal subfield parcellation using FreeSurfer v6.0.0: (A) sagittal, (B) anterior coronal, and (C) posterior coronal views of the segmented hippocampus overlaid on a high in-plane resolution MRI (T2-weighted: TR 4800; TE: 1.06; flip angle 135°; FOV 150×150; bandwidth 130 Hz/px; 0.4 × 0.4 × 2.0 mm voxels). The figure legend designates each hippocampal subfield label name and the corresponding parcellation color." –Tyler J. Wishard, Bookheimer Group

Lenartowicz Group - Concurrent EEG & fMRI Recordings of Neural Oscillations

Research Group: Lenartowicz Group

Principal Investigator: Agatha Lenartowicz

Affiliation: Psychiatry & Biobehavioral Sciences

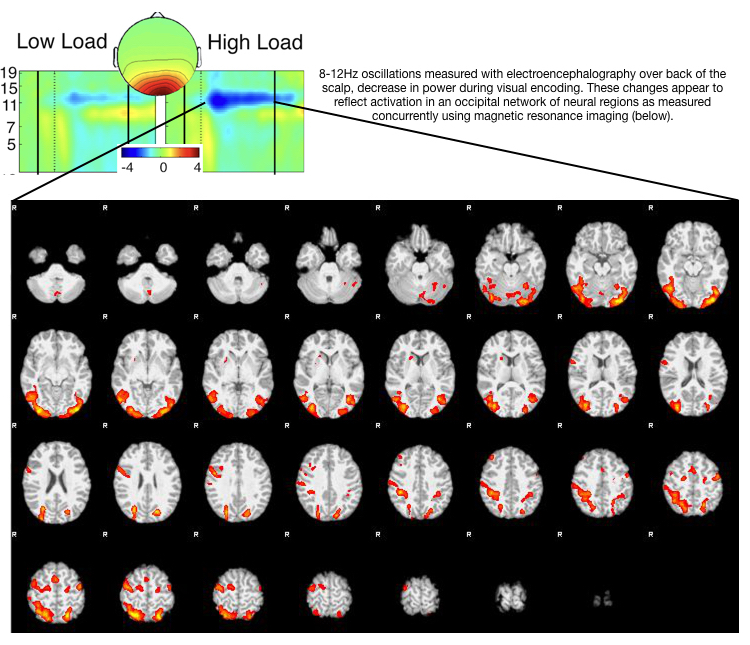

About: "The project involves concurrent collection of EEG and fMRI recording of neural signals during visual attention. The concurrent collection facilitates a more comprehensive framework of neural mechanisms that drive attention during visual encoding. Analysis, performed on the HPC cluster, involves processing, statistical assessment and cross-modal integration of these multi-dimensional data." –Agatha Lenartowicz

How the Hoffman2 Cluster is facilitating this research: A key benefit of Hoffman2 HPC is the availability of parallel computing, which drastically reduces computation time. In extreme cases, our processing time was reduced from 2 months to hours.

Software used on the Hoffman2 Cluster: We leverage HPC job arrays for these analyses, as well as neuroimaging software (FSL) and MATLAB.

Reference: Lenartowicz A, Lu S, Rodriguez C, et al. Alpha desynchronization and fronto-parietal connectivity during spatial working memory encoding deficits in ADHD: A simultaneous EEG-fMRI study. Neuroimage Clin. 2016;11:210-223. Published 2016 Feb 6. doi:10.1016/j.nicl.2016.01.023. https://pubmed.ncbi.nlm.nih.gov/26955516/

MontiLab - Segmenting Brain Tissue in Pathological Brains

Research Group: Monti Lab

Principal Investigator: Martin M. Monti

Affiliation: Department of Psychology

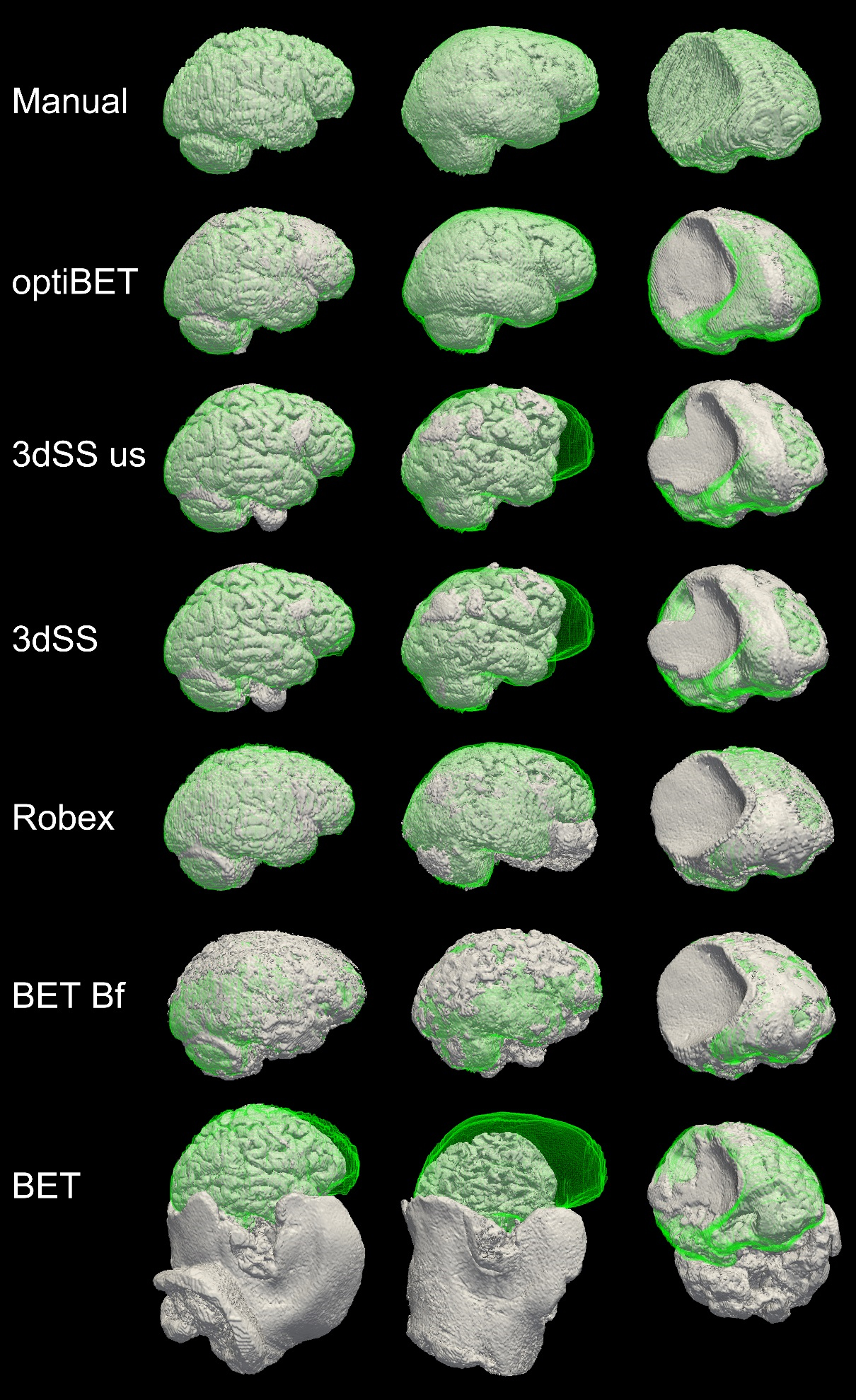

About: "To study on a large scale how severe brain injury affects brain tissues and cognition, we need software pipelines able to perform high-throughput analysis of MRI data. Existing software, however, is developed to work on healthy brains and typically fails when severe brain injury distorts the shape of a brain. In this project, we leveraged existing state-of-the-art software for MRI tissue segmentation and the computational power of Hoffman2 to search through a large space of settings and combinations of multiple software packages in order to create a data-driven, optimized tissue segmentation pipeline that is robust to brain pathology. Known as optiBET (Lutkenhoff et al., 2014, PLoS One), it is freely available for download to the whole research community." –Martin M. Monti

How the Hoffman2 Cluster is facilitating this research: Hoffman2 allows our large volume of data to be handled and helps us manage the heavy computational footprint.

Pipeline developed to perform the study: optiBET

Reference: Lutkenhoff ES, Rosenberg M, Chiang J, Zhang K, Pickard JD, Owen AM, Monti MM. (2014) Optimized Brain Extraction for Pathological Brains (optiBET). PLoS ONE 9(12): e115551. doi:10.1371/journal.pone.0115551

![Visual representation of the optiBET script workflow: (1) initial approximate brain extraction is performed using BET (and options ‘B’ and ‘f’, as suggested previously [5]); (2) sequential application of a linear and non-linear transformation from native space to MNI template space; (3) back-projection of a standard brain-only mask from MNI to native space; and (4) mask-out brain extraction.](../../_images/monti_workflow_graphic.jpg)

"Visual representation of the optiBET script workflow: (1) initial approximate brain extraction is performed using BET (and options ‘B’ and ‘f’, as suggested previously [5]); (2) sequential application of a linear and non-linear transformation from native space to MNI template space; (3) back-projection of a standard brain-only mask from MNI to native space; and (4) mask-out brain extraction." – Lutkenhoff et al., 2014.

"Rendering of brain extractions obtained from different algorithms/options (shown in grey) compared to the manually traced benchmark (shown in green wireframe) for three sample patients with different degrees of brain pathology (little, medium, and high, for the left, middle and right columns respectively)." – Lutkenhoff et al., 2014.

SilvaLab - How does our brain link memories across time?

Research Group: Silva Lab

Principal Investigator: Alcino J. Silva

Postdoctoral Researcher: Daniel Gomes de Almeida Filo, Silva Lab Member

Affiliation: Department of Neurobiology

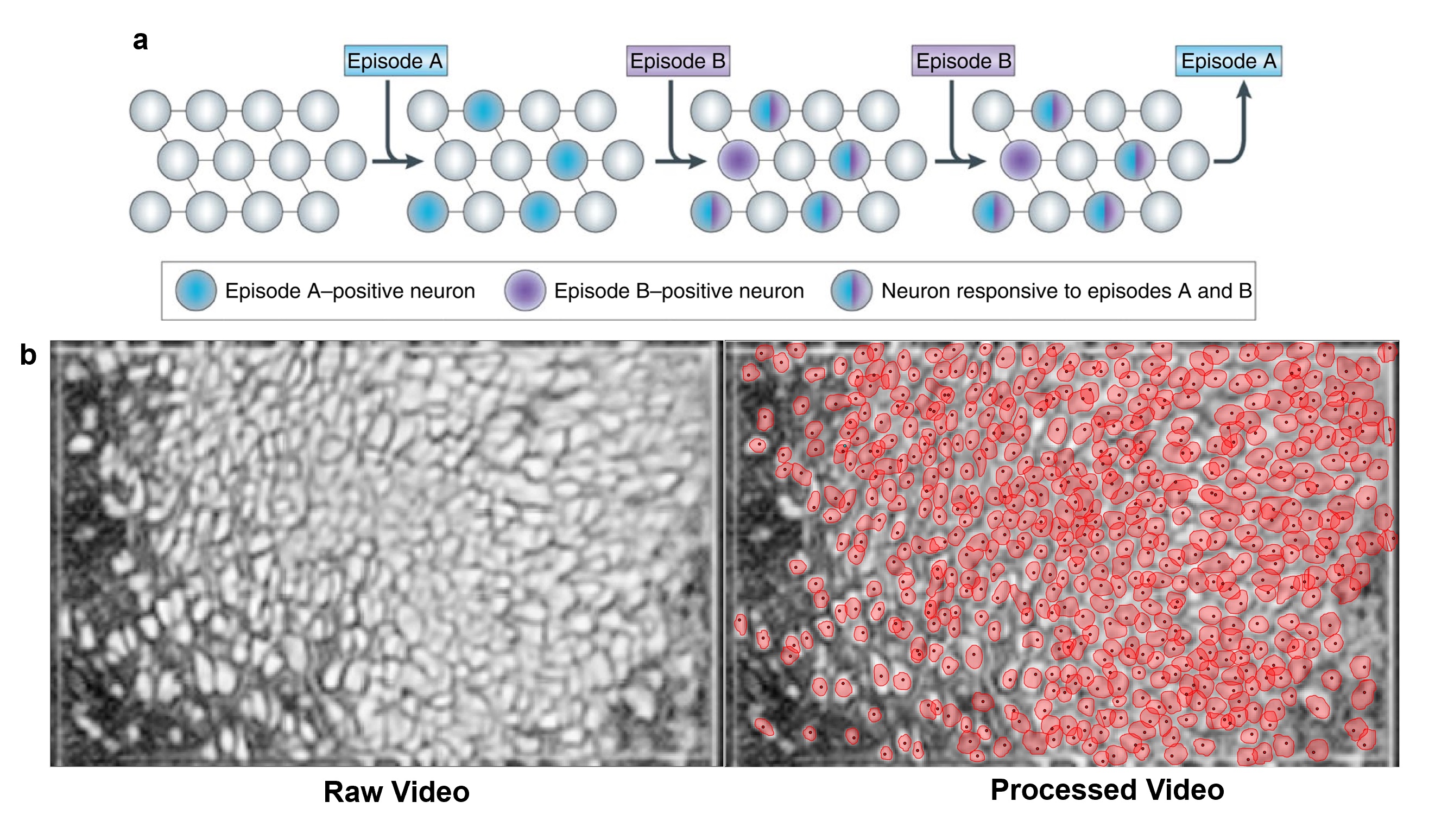

About: "Our lab is using the advanced computing capabilities of the Hoffman2 Cluster to process movies of brain activity filmed with head-mounted fluorescence microscopes implanted on the heads of mice, which measure neuronal activity while the mice perform learning and memory tasks. Analyses that used to take us days of painstaking work on traditional computers now take hours on the Hoffman2 Cluster. With these advanced computing capabilities, we have been able to unravel the neuronal mechanisms underlying how our brain links memories across time." –Daniel Gomes de Almeida Filo, Silva Lab Member

How the Hoffman2 Cluster is facilitating this research: The biggest contribution of the Hoffman2 Cluster to our type of analysis is the ability to submit multiple jobs at the same time to very powerful computers. The analysis of videos for the automatic detection of neurons and projection of their activity is a heavy computational task that demands high computational power, parallel computing, and ample memory.

Software used on the Hoffman2 Cluster: MATLAB

Reference: Silva Lab

"a) Memories that are encoded close in time are represented by overlapping neural populations as a result of learning-related increases in excitability. This shared ensemble of neurons may represent the neuronal basis for linking memories through time, as depicted by the model. b) Videos recorded from the brains of freely behaving mice are processed for the automatic detection of neurons and projection of their activity. This heavy computational processing takes advantage of the Hoffman2 Cluster." –Daniel Gomes de Almeida Filo, Silva Lab Member